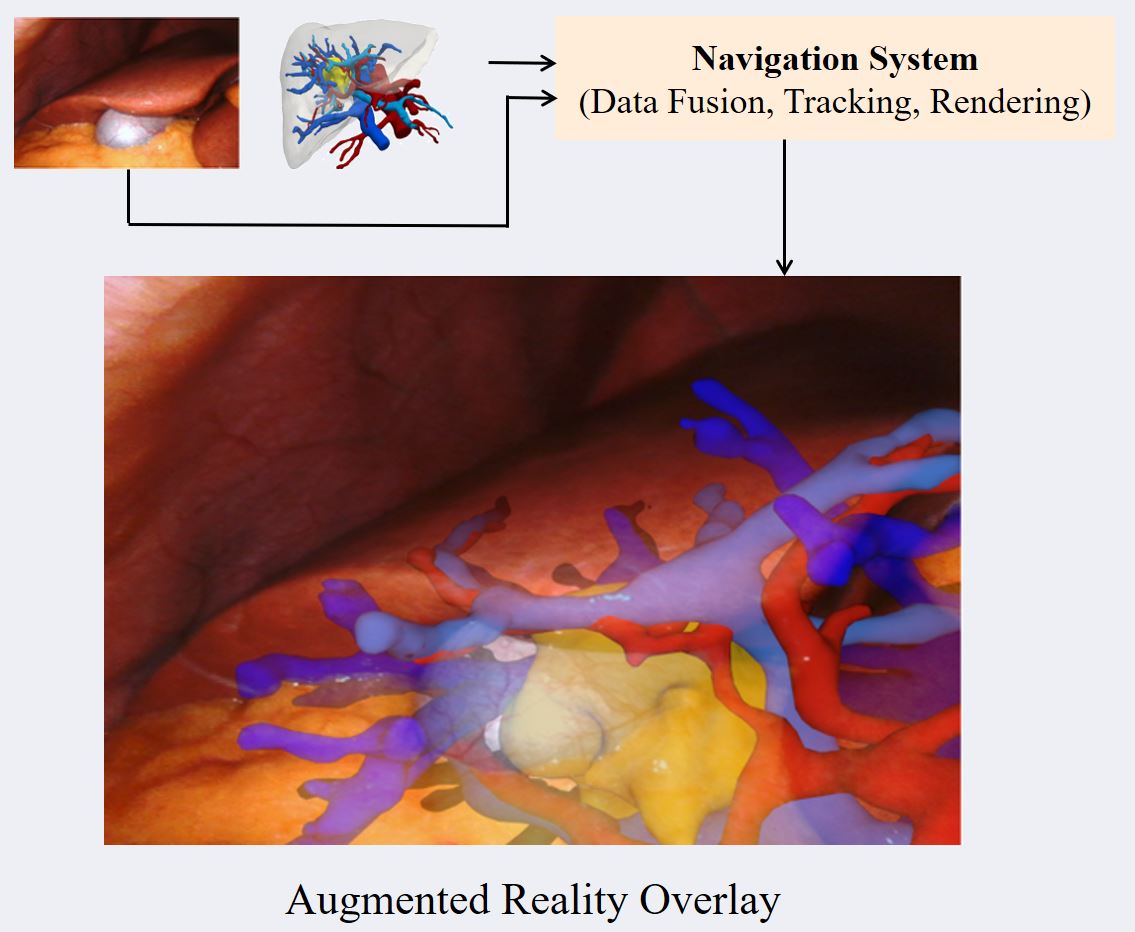

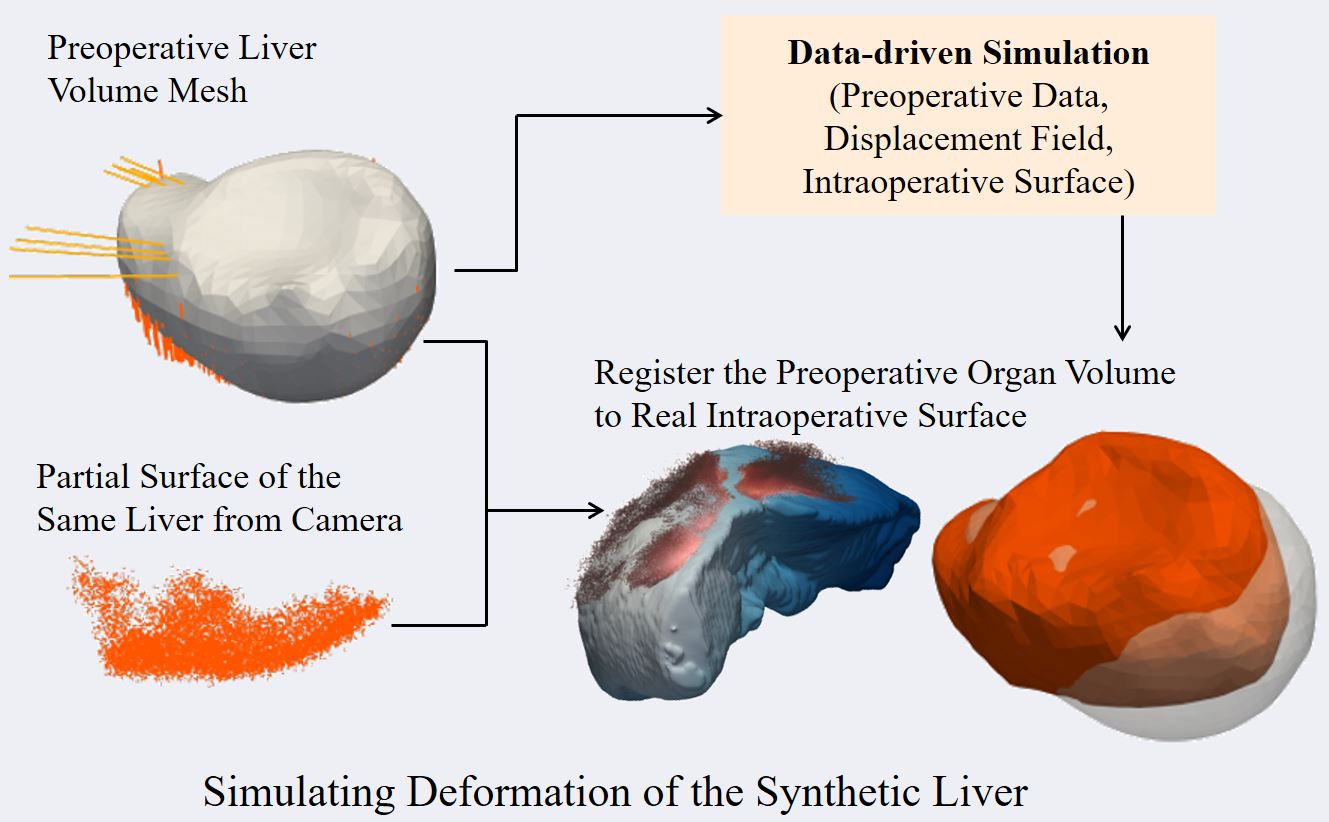

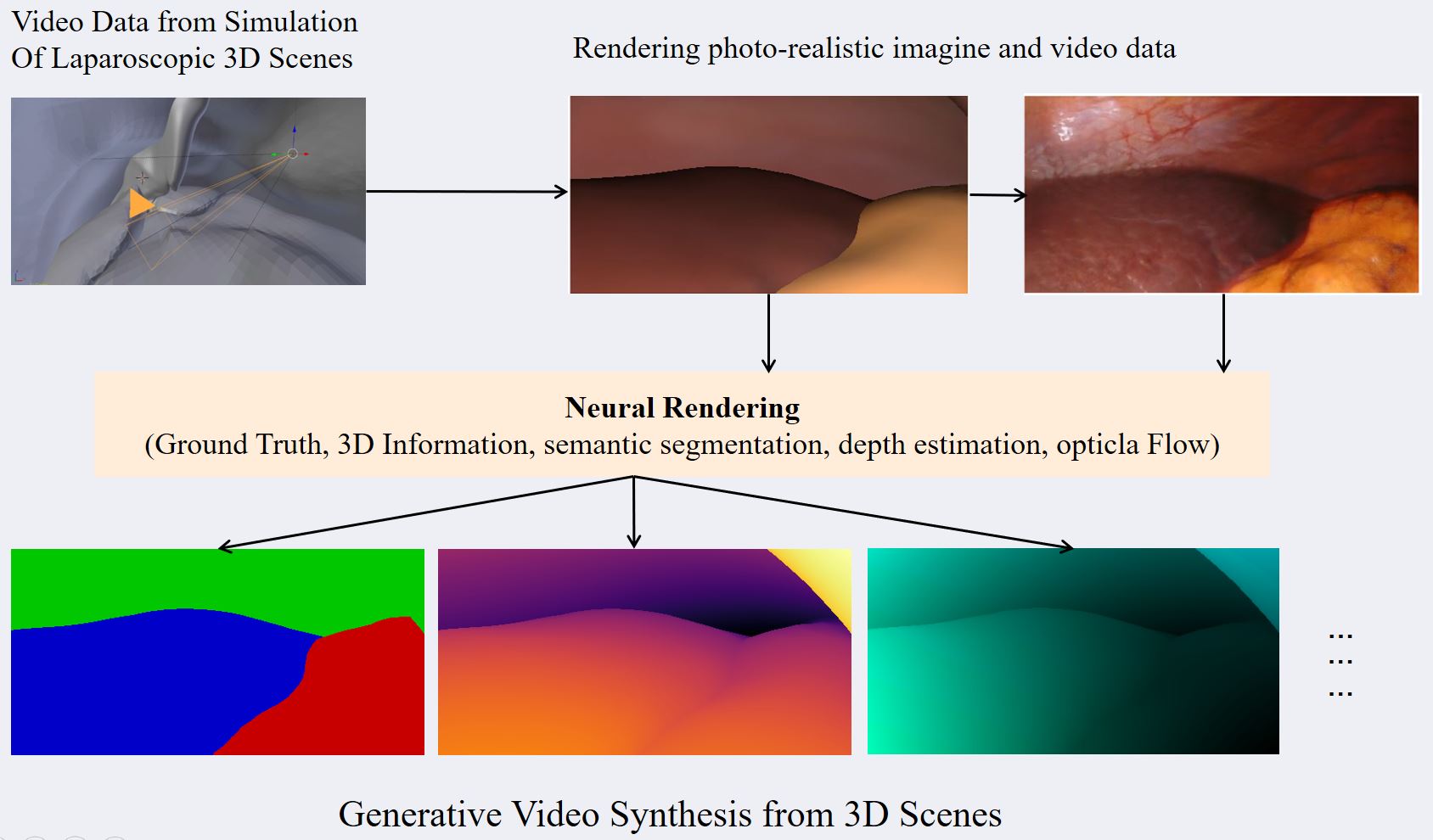

Computer-assisted navigation (CAN) for surgical tumor treatment is a rapidly developing research field. CAN systems enable surgeons to accurately identify and remove tumors while minimizing damage to surrounding healthy tissue, with the aim of improving both oncological and functional outcomes. Our research focuses on improving the speed, accuracy, and precision of soft-tissue navigation systems. We also develop new methods for generating the real and synthetic datasets needed for training, and for integrating the resulting ground-truth data into both intraoperative workflows and surgical planning. Our research questions span a wide spectrum, from machine learning methods that can improve surgical systems to clinical intraoperative applications, all in pursuit of more personalized patient outcomes and a higher quality of life for those affected by cancer.

We are researching methods, developing algorithms, and creating datasets for:

- 3D Reconstruction, Depth Estimation, Camera Calibration

- Intraoperative Segmentation

- Rigid and Non-Rigid Registration

- Soft-Tissue Modeling

- User Interaction & User Intervention

- Augmented Reality & User Interfaces