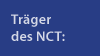

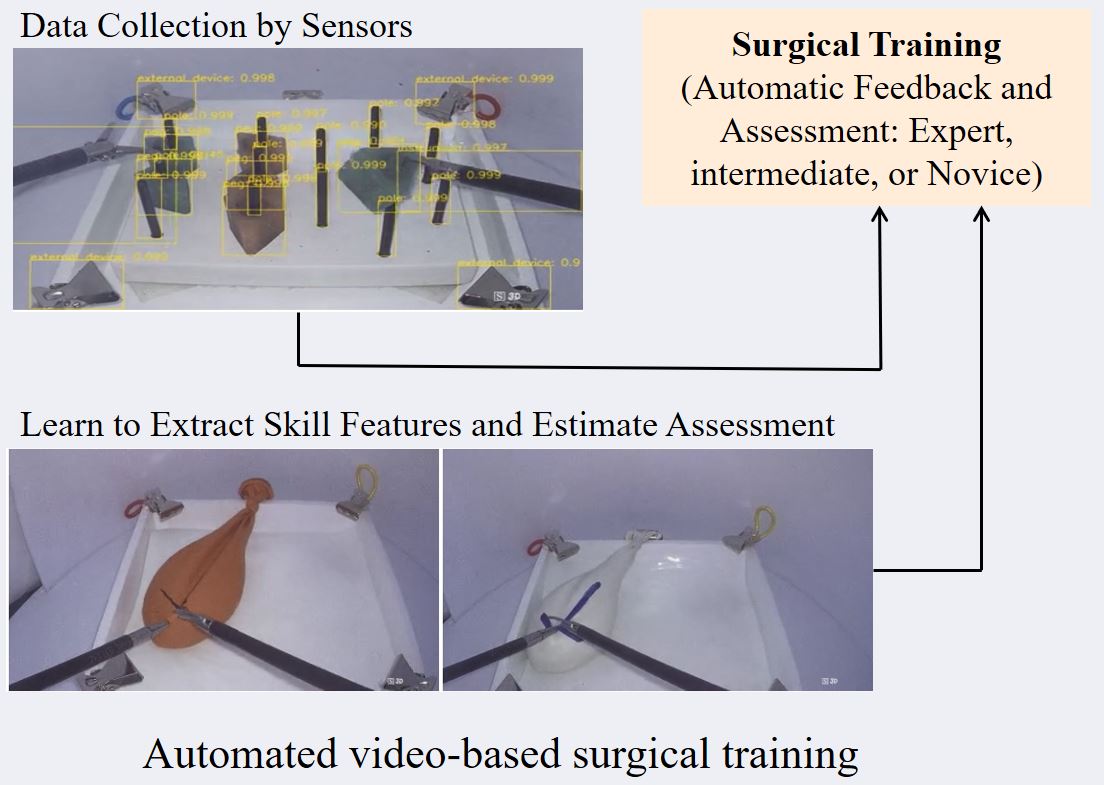

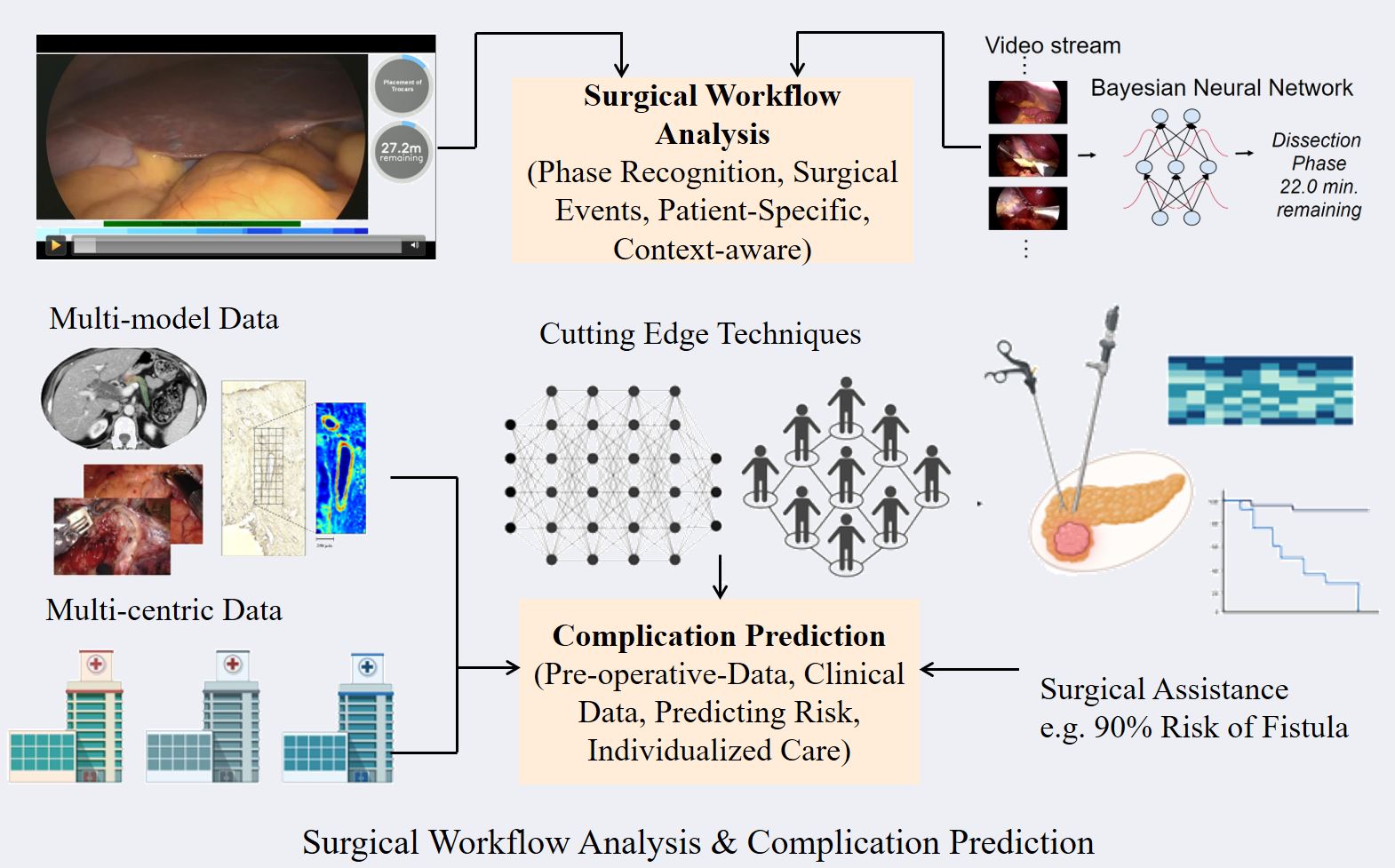

Data-driven surgical analytics and robotics combine machine learning, data analytics, and robotics to improve surgical outcomes and enhance the capabilities of surgical systems. Our research aims to bridge the current gap between robotics, novel sensors, and artificial intelligence in order to provide assistance along the surgical treatment path: by quantifying surgical expertise and making it accessible to machines, we seek to improve patient outcomes. To achieve this goal, we make full use of our regional advantages, working closely in an interdisciplinary setting with different partners, in particular surgical experts, and relying on the existing infrastructure (including a fully equipped experimental OR and simulation room as well as imaging platforms).

Our research in data-driven surgical analytics involves collecting and analyzing large amounts of surgical data, such as surgical images, videos, and patient records, which are then used to develop predictive models, identify patterns and trends, and optimize surgical workflows. On the robotics side, our key research areas include surgical workflow optimization, autonomous surgery, robotic guidance and assistance, surgical training and simulation, and novel human-machine interaction concepts.